Stable Diffusion

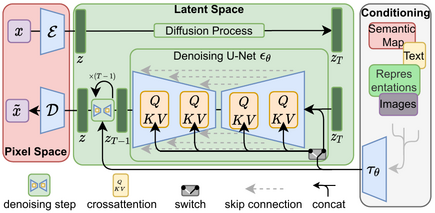

Stable Diffusion (SD) is a deep learning model that uses latent diffusion to generate high-quality images from text descriptions. In addition to generating images from text prompts, SD can also perform other tasks such as inpainting, outpainting, and image-to-image translation.

The development of Stable Diffusion represents a significant advancement in the field of Generative AI, providing creators, designers, and developers with a powerful tool for image generation tasks. Advanced users can also train their own models based on the Stable Diffusion framework, fine-tuning it for specific use cases.

The Variational Autoencoder (VAE) in Stable Diffusion's architecture compresses images into a lower-dimensional latent space that captures the essential features of the data. During training, the VAE encoder maps each input image to a latent representation, and the diffusion process is learned in that latent space rather than in pixel space, which greatly reduces the computation required. During generation, the model denoises a latent sampled from noise, and the VAE decoder converts the result back into a full-resolution image. The quality of the VAE is therefore crucial for generating detailed and diverse images from textual descriptions.
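To make the size of that compression concrete, the sketch below computes the latent shape for a given image size. It assumes a spatial downsampling factor of 8 and 4 latent channels, which are the values used by the publicly released SD v1 checkpoints; other variants may differ, and the function names are hypothetical.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return (channels, height, width) of the latent for an RGB image.

    The defaults are assumptions matching the SD v1 checkpoints.
    """
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, downsample=8, latent_channels=4):
    """How many times fewer values the latent holds than the RGB image."""
    c, h, w = latent_shape(height, width, downsample, latent_channels)
    return (3 * height * width) / (c * h * w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512x512 RGB image is represented by 48 times fewer values in latent space, which is why running diffusion on latents is much cheaper than running it on pixels.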

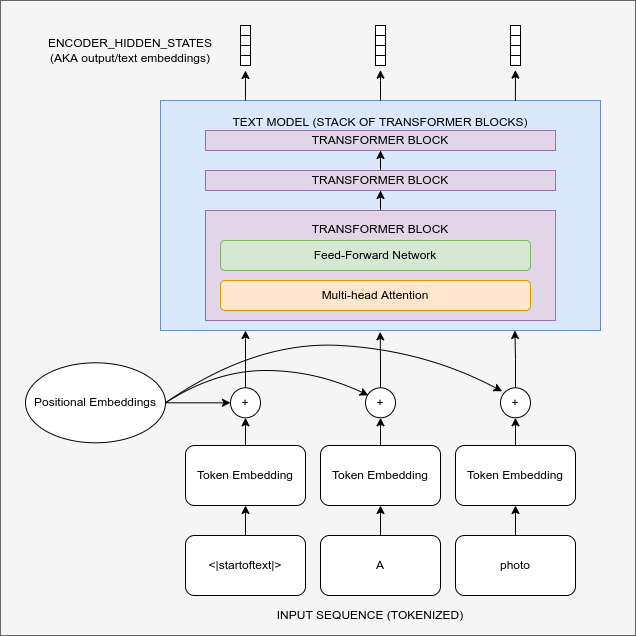

Text Conditioning involves embedding text descriptions into a format that the model can understand and use to guide the image generation process. This ensures that the generated images are not just random creations but are closely aligned with the themes, objects, and styles described in the input text.
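One common way for text embeddings to guide generation is cross-attention, where each image position queries the text tokens and mixes their values by similarity. The sketch below is a toy, single-head version in plain Python. The vectors are made up for illustration, the function names are hypothetical, and a real model would add learned projection matrices and multiple heads.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(image_queries, text_keys, text_values):
    """For each image position, mix text values by query-key similarity.

    Returns (outputs, weights); weights[i][j] is how strongly image
    position i attends to text token j.
    """
    scale = 1.0 / math.sqrt(len(text_keys[0]))
    outputs, weights = [], []
    for q in image_queries:
        w = softmax([dot(q, k) * scale for k in text_keys])
        dims = range(len(text_values[0]))
        outputs.append([sum(wi * v[d] for wi, v in zip(w, text_values)) for d in dims])
        weights.append(w)
    return outputs, weights


# Toy data (assumed): two text tokens, one image position aligned with token 0.
keys = [[4.0, 0.0], [0.0, 4.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = cross_attention([[1.0, 0.0]], keys, values)
print(weights[0])  # the first weight is the larger of the two
```

The attention weights for each image position sum to 1, so the text tokens most similar to that position contribute most to what is drawn there.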

Stable Diffusion extends beyond Text Conditioning by offering various other Conditioning types to enhance its image generation capabilities, including Super-Resolution, Inpainting, and Depth-to-Image. These additional Conditioning types broaden Stable Diffusion’s versatility and applications, catering to diverse needs ranging from creative art to scientific and technological uses.

Super-Resolution Conditioning: This type focuses on increasing the resolution of images. Instead of simply recreating images from textual descriptions, Super-Resolution Conditioning enables the model to generate images with higher detail compared to the original. This is particularly useful in scenarios requiring high clarity and detail, such as in medical imaging or scientific image analysis.

Inpainting Conditioning: This Conditioning allows the model to fill in missing regions within an image. Rather than generating a complete image from scratch, Inpainting Conditioning focuses on reconstructing areas that are lost or obscured. This is valuable for restoring and reconstructing damaged or incomplete images.

Depth-to-Image Conditioning: This type generates a realistic image guided by depth information. A depth map records the distance from the camera to each point in the scene, and Depth-to-Image Conditioning uses this data to preserve the sense of depth and the spatial layout of objects while synthesizing their texture and appearance. This is useful when the spatial structure of a scene must be retained while its look changes.
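The masking idea behind the inpainting conditioning described above can be sketched as a simple blend: keep the original values where the mask is 0 and take the generated values where it is 1. This is only an illustration on small 2D grids with a hypothetical function name. Real pipelines typically apply the mask to latents during denoising or feed it to the model as an extra input, which this sketch does not show.

```python
def composite_inpaint(original, generated, mask):
    """Blend two equally sized 2D grids.

    mask value 1 takes the generated pixel, 0 keeps the original pixel;
    fractional values give a soft blend at the mask edge.
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated, and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("original, generated, and mask must have the same width")
        result.append([m * g + (1 - m) * o
                       for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


original = [[10, 20], [30, 40]]
generated = [[1, 2], [3, 4]]
mask = [[0, 1], [0, 0]]  # only the top-right pixel is "missing"
print(composite_inpaint(original, generated, mask))  # [[10, 2], [30, 40]]
```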

Why Train Your Own Model:

Although Stable Diffusion provides robust pre-trained models for generating images from text, training your own model offers several benefits. Fine-tuning a new model based on the Stable Diffusion framework allows for optimization and personalization of the image generation process to meet specific needs or styles.

Fine-tuning transforms a base model into a specialized expert, capable of accurately understanding and reproducing specific styles or themes. Creating well-tuned models involves techniques such as LoRA (Low-Rank Adaptation) and LyCORIS, which train a small set of additional weights instead of the full model and enable the generation of images with specific styles.
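As a rough illustration of why low-rank adaptation is cheap to train, the sketch below merges a low-rank update into a frozen weight matrix as W + (alpha / r) · B·A and compares parameter counts. It uses plain Python lists, and the function names, the toy matrices, and the 768x768 layer size are assumptions chosen for the example, not values taken from any particular checkpoint.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(weight, lora_b, lora_a, alpha):
    """Return weight + (alpha / rank) * (lora_b @ lora_a).

    weight: out x in, lora_b: out x rank, lora_a: rank x in.
    """
    rank = len(lora_a)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


def trainable_params(out_features, in_features, rank):
    """Return (full fine-tuning count, low-rank count) for one layer."""
    return out_features * in_features, rank * (out_features + in_features)


weight = [[1, 0], [0, 1]]          # frozen base weight (toy values)
lora_b, lora_a = [[1], [2]], [[3, 4]]  # rank-1 factors (toy values)
print(lora_merge(weight, lora_b, lora_a, alpha=1))  # [[4.0, 4.0], [6.0, 9.0]]
print(trainable_params(768, 768, rank=4))           # (589824, 6144)
```

In this example, the rank-4 update trains 6,144 values for the layer instead of 589,824, and the base weights stay untouched until the update is merged.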